Speaker: Weijie Su (University of Pennsylvania)

Time: Wed., 14:00-15:00, July 5, 2023

Venue: Lecture Hall, Floor 3, Jin Chun Yuan West Bldg.; Zoom Meeting ID: 271 534 5558, Passcode: YMSC

Lecture date: 2023-07-05

Abstract:

Privacy-preserving data analysis has rested on a firm mathematical foundation since the introduction of differential privacy (DP) in 2006. This privacy definition, however, has some well-known weaknesses; notably, it does not handle composition tightly. In this talk, we propose a relaxation of DP, termed "$f$-DP", which has a number of appealing properties and avoids some of the difficulties associated with prior relaxations. This relaxation allows for lossless reasoning about composition and post-processing and, notably, offers a direct way to analyze privacy amplification by subsampling. Within this class, we define a canonical single-parameter family of definitions, termed "Gaussian differential privacy" (GDP), based on hypothesis testing of two shifted normal distributions. We prove that this family is focal to $f$-DP by establishing a central limit theorem, which shows that the privacy guarantees of any hypothesis-testing-based definition of privacy converge to Gaussian differential privacy in the limit under composition. From a non-asymptotic standpoint, we introduce the Edgeworth Accountant, an analytical approach to composing the $f$-DP guarantees of private algorithms. Finally, we demonstrate the tools we develop by giving an improved analysis of the privacy guarantees of noisy stochastic gradient descent.
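
The following Python sketch illustrates a few of the quantities the abstract refers to. It assumes the closed-form expressions commonly stated for Gaussian differential privacy: the μ-GDP trade-off function G_μ(α) = Φ(Φ⁻¹(1 − α) − μ), the composition rule μ = √(μ₁² + … + μₖ²), the conversion from μ-GDP to (ε, δ)-DP, and the central-limit approximation μ ≈ p·√(T(e^(1/σ²) − 1)) for noisy SGD with subsampling rate p, T iterations, and noise multiplier σ. All function names are hypothetical illustrations, not code from the talk, and the Edgeworth Accountant is not implemented here.

```python
import math
from statistics import NormalDist
from typing import Iterable

# Standard normal N(0, 1): Phi = STD.cdf, Phi^{-1} = STD.inv_cdf.
STD = NormalDist()


def gdp_tradeoff(alpha: float, mu: float) -> float:
    """Trade-off function G_mu(alpha) = Phi(Phi^{-1}(1 - alpha) - mu).

    The smallest type II error of any level-alpha test of N(0, 1) versus
    N(mu, 1). A mechanism is mu-GDP if telling neighboring datasets apart
    is at least as hard as this test.
    """
    if alpha <= 0.0:
        return 1.0
    if alpha >= 1.0:
        return 0.0
    return STD.cdf(STD.inv_cdf(1.0 - alpha) - mu)


def compose_gdp(mus: Iterable[float]) -> float:
    """Composing mu_1-, ..., mu_k-GDP mechanisms gives sqrt(sum mu_i^2)-GDP."""
    return math.sqrt(sum(m * m for m in mus))


def gdp_to_delta(eps: float, mu: float) -> float:
    """Smallest delta such that mu-GDP implies (eps, delta)-DP (assumes mu > 0)."""
    return STD.cdf(-eps / mu + mu / 2) - math.exp(eps) * STD.cdf(-eps / mu - mu / 2)


def noisy_sgd_mu_clt(p: float, steps: int, sigma: float) -> float:
    """Hypothetical helper: CLT approximation of mu for subsampled noisy SGD.

    mu ~= p * sqrt(T * (exp(1 / sigma^2) - 1)), an asymptotic statement
    (p * sqrt(T) held bounded), not an exact finite-T guarantee.
    """
    return p * math.sqrt(steps * (math.exp(1.0 / sigma**2) - 1.0))


if __name__ == "__main__":
    mu = compose_gdp([0.5] * 4)  # four 0.5-GDP steps
    print("composed mu:", mu)
    print("type II error at alpha = 0.05:", gdp_tradeoff(0.05, mu))
    print("delta at eps = 1:", gdp_to_delta(1.0, mu))
    print("NoisySGD CLT mu:", noisy_sgd_mu_clt(0.01, 10_000, 1.0))
```

For example, composing four 0.5-GDP mechanisms yields 1-GDP, and `gdp_to_delta(1.0, 1.0)` gives δ ≈ 0.127 at ε = 1. The closed-form composition rule is what makes GDP convenient: composition reduces to adding squared parameters, whereas for general $f$-DP guarantees a non-asymptotic tool such as the Edgeworth Accountant is needed.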

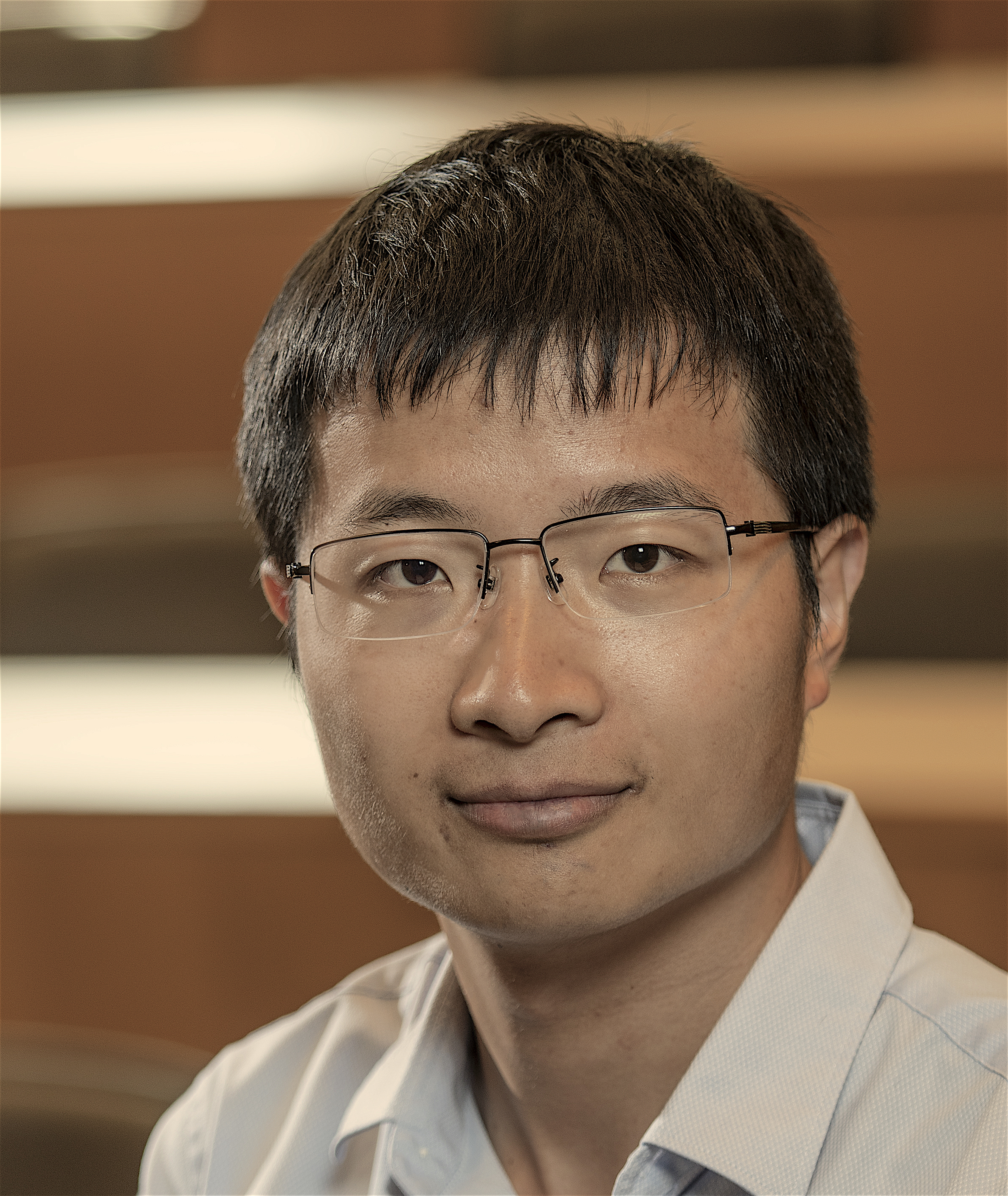

Bio:

Weijie Su is an Associate Professor at the Wharton School, University of Pennsylvania, with secondary appointments in the Department of Mathematics and the Department of Computer and Information Science. He is a co-director of the Penn Research in Machine Learning Center. Before joining Penn, he received his Ph.D. from Stanford University in 2016 under the supervision of Emmanuel Candès, and his bachelor's degree in mathematics from Peking University in 2011. His research interests span deep learning theory, large language models, privacy-preserving data analysis, mathematical optimization, mechanism design, and high-dimensional statistics. He is a recipient of two gold medals at the inaugural S.-T. Yau College Student Mathematics Contest in 2010, the Stanford Theodore Anderson Dissertation Award in 2016, an NSF CAREER Award in 2019, an Alfred Sloan Research Fellowship in 2020, the SIAM Early Career Prize in Data Science in 2022, and the IMS Peter Gavin Hall Prize in 2022.

Video: http://archive.ymsc.tsinghua.edu.cn/pacm_lecture?html=Gaussian_Differential_Privacy.html